Friday, March 13, 2015

YouTube, Google Forms, and Apps Script: BFFs

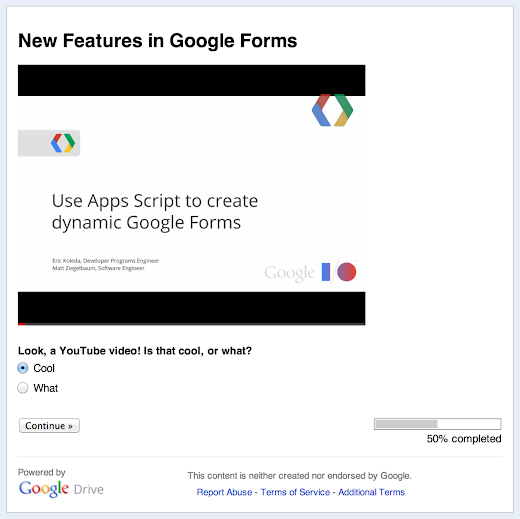

Last month, we announced several new ways to customize Google Forms. As of this week, three of those options are also available in forms created from Apps Script — embedding YouTube videos, displaying a progress bar, and showing a custom message if a form isn’t accepting responses.

Adding a YouTube video is as simple as any other Google Forms operation in Apps Script — from the Form object, just call addVideoItem(), then setVideoUrl(youtubeUrl). Naturally, you can also control the video’s size, alignment, and so forth.

To show a progress bar, call setProgressBar(enabled). Don’t even need a second sentence to explain that one. The custom message for a form that isn’t accepting responses is similarly easy: setCustomClosedFormMessage(message), and you’re done.

Want to give it a try yourself? Copy and paste the sample code below into the script editor at script.google.com, then hit Run. When the script finishes, click View > Logs to grab the URL for your new form, or look for it in Google Drive.

function showNewFormsFeatures() {
  var form = FormApp.create('New Features in Google Forms');
  var url = form.getPublishedUrl();

  form.addVideoItem()
      .setVideoUrl('http://www.youtube.com/watch?v=38H7WpsTD0M');

  form.addMultipleChoiceItem()
      .setTitle('Look, a YouTube video! Is that cool, or what?')
      .setChoiceValues(['Cool', 'What']);

  form.addPageBreakItem();

  form.addCheckboxItem()
      .setTitle('Progress bars are silly on one-page forms.')
      .setChoiceValues(['Ah, that explains why the form has two pages.']);

  form.setProgressBar(true);
  form.setCustomClosedFormMessage('Too late — this form is closed. Sorry!');
  // form.setAcceptingResponses(false); // Uncomment to see custom message.

  Logger.log('Open this URL to see the form: %s', url);
}

| Dan Lazin profile | twitter Dan is a technical writer on the Developer Relations team for Google Apps Script. Before joining Google, he worked as a video-game designer and newspaper reporter. He has bicycled through 17 countries. |

Thursday, March 12, 2015

GQueues Mobile: a case for the HTML5 web app

With the proliferation of mobile app stores, the intensity of the native app vs. web app debate in the mobile space continues to increase. While native apps offer tighter phone integration and more features, developers must maintain multiple apps and codebases. Web apps can serve a variety of devices from only one source, but they are limited by current browser technology.

In the Google IO session HTML5 versus Android: Apps or Web for Mobile Development?, Google Developer Advocates Reto Meier and Michael Mahemoff explore the advantages of both strategies. In this post I describe my own experience as an argument that an HTML5 app is a viable and sensible option for online products with limited resources.

Back in 2009 I started developing GQueues, a simple yet powerful task manager that helps people get things done. Built on Google App Engine, GQueues allows users to log in with Gmail and Google Apps accounts, and provides a full set of features including two-way Google Calendar syncing, shared lists, assignments, subtasks, repeating tasks, tagging, and reminders.

While I initially created an “optimized” version of the site for phone browsers, users have been clamoring for a native app ever since its launch two years ago. As the product’s sole developer, with every new feature I add, I consider quite carefully how it will affect maintenance and future development. Creating native apps for iOS, Android, Palm, and Blackberry would not only require a huge initial investment of time, but also dramatically slow down every new subsequent feature added, since each app would need updating. If GQueues were a large company with teams of developers this wouldn’t be as big an issue, although multiple apps still increase complexity and add overhead.

After engaging with users on our discussion forum, I learned that when they asked for a “native app,” what they really wanted was the ability to manage their tasks offline. My challenge was clear: if I could create a fast, intuitive web app with offline support, then I could satisfy users on a wide variety of phones while having only one mobile codebase to maintain as I enhanced the product.

Three months ago I set out to essentially rewrite the entire GQueues product as a mobile web app that uses a Web SQL database for offline storage and an Application Cache for static resources. The journey was filled with many challenges, to say the least. With current mobile JavaScript libraries still maturing, I found it necessary to create my own custom framework to run the app. Since GQueues data is stored in App Engine’s datastore, which is a schema-less, “noSQL” database, syncing to the mobile SQL database proved quite challenging as well. Essentially this required creating an object-relational mapping layer in JavaScript to sit on top of the mobile database and interface with data on App Engine as well as input from the user. As a bonus challenge, current implementations of Web SQL only support asynchronous calls, so architecting the front-end JavaScript code required heavy use of callbacks and careful planning around data availability.
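To make the mapping problem a little more concrete, here is a minimal sketch of one way a schema-less entity could be flattened into a fixed SQL row and rebuilt afterwards. It is not the GQueues framework: the column list, the `extra` JSON column, and the function names `entityToRow` and `rowToEntity` are all hypothetical, chosen only for illustration.

```javascript
// Hypothetical fixed columns of a "tasks" table in the mobile SQL database.
const TASK_COLUMNS = ['id', 'title', 'dueDate'];

// Split a schema-less entity into fixed columns plus a JSON "extra" column
// that holds every property the table has no column for.
function entityToRow(entity, columns) {
  const row = {};
  const extra = {};
  for (const [key, value] of Object.entries(entity)) {
    if (columns.includes(key)) {
      row[key] = value;
    } else {
      extra[key] = value;
    }
  }
  // Columns missing from the entity are stored as NULL.
  for (const column of columns) {
    if (!(column in row)) row[column] = null;
  }
  row.extra = JSON.stringify(extra);
  return row;
}

// Rebuild the entity from a row read back from the SQL database.
function rowToEntity(row) {
  const { extra, ...fixed } = row;
  const entity = Object.assign({}, JSON.parse(extra));
  for (const [key, value] of Object.entries(fixed)) {
    if (value !== null) entity[key] = value;
  }
  return entity;
}
```

In this sketch an entity property that happens to be named `extra` would collide with the overflow column, so a real layer would need to reserve or rename it.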

During development, my test devices included a Nexus S, iPhone, and iPad. A day before launch I was delighted to find the mobile app worked great on Motorola Xoom and Samsung Galaxy Android tablets, as well as the Blackberry Playbook. This fortuitous discovery reaffirmed my decision to have one codebase serving many devices. Last week I launched the new GQueues Mobile, which so far has been met with very positive reactions from users – even the steadfast “native app” proponents! With a team of developers I surely could have created native apps for several devices, but with my existing constraints I know the HTML5 strategy was the right decision for GQueues. Check out our video and determine for yourself if GQueues Mobile stacks up to a native app.

| Cameron Henneke Cameron Henneke is the Founder and Principal Engineer of GQueues. Cameron is based in Chicago and loves Python and JavaScript equally. While not coding or answering support emails, he enjoys playing the piano, backpacking, and cheering on the Bulls. |


What is your top 1 agile tip? (AgileVancouver)

@lucisferre - "Working towards continuous delivery"

@dbelcham - "Be agile w/ agile practices. Adopt what works"

@mikeeedwards - "One step at a time. Find small wins"

unknown - "Adopt pair programming"

Angel from Spain - "Make the change come from them - get them to see the problem and come up with the improvement"

@Ang3lFir3 - "Can’t do it without the right people. One bad egg spoils the whole bunch. Get the right people on the bus"

@dwhelan - "Find the bottleneck in your value flow and cut it in half"

@srogalsky - "Uncover better ways. Never stop learning. You are never finished being agile"

@mfeathers - "Don’t forget about the code or it will bury you. It will $%#ing bury you"

@robertreppel - "Recognize your knowledge gaps and bring in help if you need it"

@jediwhale - "Pull the caps lock key off your keyboard"

Next time I’m on a panel, the question will be: "I love agile because..." Feel free to comment with your answers.

Wednesday, March 11, 2015

Integrating Balsamiq Mockups with Google Drive

Editor’s Note: This blog post is authored by Peldi Guilizzoni, from Balsamiq. As a user of Balsamiq myself, it was great to see them join as one of the first Drive apps! -- Steven Bazyl

Hi there! My name is Peldi and I am the founder of Balsamiq, a small group of passionate individuals who believe work should be fun and that life’s too short for bad software.

We make Balsamiq Mockups, a rapid wireframing tool that reproduces the experience of sketching interfaces on a whiteboard, but using your computer, so they’re easier to share, modify, and get honest feedback on. Mockups look like sketches, so stakeholders won’t get distracted by little details, and can focus on what’s important instead.

We sell Mockups as a Desktop application, a web application and as a plugin to a few different platforms. An iPad version is also in the works.

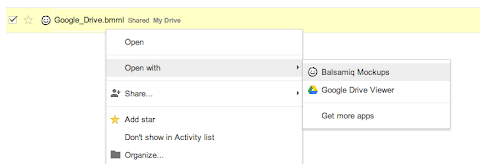

We believe that tools should adapt to the way people like to work, not the other way around. That’s why when Google Drive came out, we jumped at the chance to integrate Mockups with it. This is the story of how the integration happened.

First of all, a little disclaimer. Although my job these days is to be CEO and all that, I come from a programming background. I started coding at 12 and worked at Macromedia/Adobe for 6 years as a programmer. I’d say I’m a pretty good programmer…just a bit rusty, ok? I realize that the decision to write the code for Mockups for Google Drive myself instead of asking one of Balsamiq’s better programmers to do it might have been a bit foolish, but we really wanted to be a launch partner and the programmers were already busy with lots of other stuff, plus I didn’t want to pass up on the chance to work on something cool after dinner for a while. ;) OK so now you know the background, let’s get started.

Once I got access to the Google Drive API documentation and looked around a bit, I started by following the detailed "sample application" tutorial.

The sample was written in Python, used OAuth and the Google API Console, and ran on Google App Engine, all technologies I hadn’t been exposed to before.

Following along brought me back to my childhood days of copying programs line by line from PC Magazine, not really understanding what I was doing but loving it nonetheless. :)

The trickiest part was figuring out how OAuth worked: it’s a bit of a mess, but after you play with it a little and read a few docs, it starts making sense. Stick with it, it’s the future! ;) Plus the downloadable sample app had hidden all that stuff in a neat little library, so you don’t have to worry about it so much.

Setting up the sample application took around 2-3 hours, easy peasy. Once that was done, I just had to convert it to become Balsamiq Mockups for Google Drive. Because I had done this before for other platforms, this was finally something I was comfortable doing. The bulk of our code is encapsulated in our Flash-based Mockups editor, so all I had to do was write a few functions to show the editor to the user and set it up using our internal APIs. Then I had to repurpose the "open with" and "edit" APIs from the sample app to work with the Mockups editor. All in all, this took maybe a day of work. Yay!

Once the proof of concept was up, I started turning the code into a real app. I cleaned up the code, added some comments, created a code repository for it on our Bazaar server, set up a staging environment (a parallel Google App Engine application and an unpublished Chrome Web Store listing) and integrated the build and deployment into our Jenkins server.

One tricky bit worth mentioning about integrating with Jenkins: the Google App Engine deployment script appcfg.py asks for a password interactively, which is a problem if you want to deploy automatically. The solution was to use the echo pwd | appcfg.py trick found here.

After some more testing and refinements, shipping day came, and Balsamiq Mockups for Google Drive was live.

It was a very exciting day. Getting mentioned in the official Google blog was quite awesome. The only stressful moment came because for some reason my Google App Engine account was not set up for payments (I could have sworn I had done it in advance), so our app went over our bandwidth quota an hour after launch, resulting in people receiving a white blank screen instead of the app. Two people even gave us bad reviews because of it. Boo! :(

In the days that followed, things went pretty well. People started trying it out, and only a few bug reports came in. One very useful Google App Engine feature is the "errors per second" chart in the dashboard, which gives you insight into how your app is doing.

I noticed that we had a few errors, but couldn’t figure out why. With the help of the docs and our main Developer Relations contact at Google, we narrowed them down to a couple of OAuth issues: one was that the library I was using didn’t save the refresh_token properly, and the other had to do with sessions timing out when people use the editor for over an hour and then go to save their work.

Fixing these bugs took way longer than I wanted, mostly due to the fact that I’m a total OAuth and Python n00b.

After a few particularly frustrating bug hunting sessions, I decided to rewrite the backend in Java. The benefits of this approach are that a) we get static type checking and b) I can get help from some of our programmers, since Java is a language we’re all already familiar with here.

Since by now the Java section of the Google Drive SDK website had been beefed up, the rewrite only took a day, and it felt awesome. Sorry Python, I guess I’m too old for you.

The hardest part of the Java rewrite was the Jenkins integration, since the echo pwd trick doesn’t work with the Java version of appcfg. To get around that, I had to write an Expect script, based on this Fábio Uechi blog post. By the way, I would recommend reading the Expect README; it has an awesome 1995 retro feel to it.

Overall, integrating Balsamiq Mockups with Google Drive was a breeze. Google is a technology company employing some of the brightest people in our industry, and it shows. The APIs are clean and extremely well tested. The people at Google are very responsive whenever I have an issue and have been instrumental in making us successful.

While the application is still pretty young - we are working on adding support for Drive images, linking, symbols… - we are very happy with the results we’re getting already. The Drive application netted around $2,500 in its first full month of operation, and sales are growing fast.

Alright, back to coding for me, yay! :)

Peldi

| Peldi Guilizzoni Giacomo Peldi Guilizzoni is the founder and CEO of Balsamiq, makers of Balsamiq Mockups. Balsamiq is a tiny, ten-person multi-million dollar company with offices in 4 cities around the World. A programmer turned entrepreneur, Peldi loves to learn and to share what he learns, be it via his blog, giving talks or mentoring other software startups. Follow him on Twitter @balsamiq. |

AdWords Analysis in Google Apps Script

Editor’s Note: Guest author David Fothergill works at QueryClick, a search-engine marketing company based in the UK. — Eric Koleda

Working in Paid Search account management, I’ve often found tremendous wins from making reports more useful and efficient. Refining your analytics streamlines your workflow and leaves more time for strategic and proactive thinking, which is what we’re paid for, not endless number-crunching.

The integration between Google Analytics and Apps Script has opened up lots of opportunities for me to make life easier through automation. In a recent blog post on my agency’s website, I outlined how an automated report can quickly “heatmap” conversion rate by time and day. The aim of the report is to provide actionable analysis to inform decisions on day-part bidding and budget strategies.

In that post, I introduce the concepts and provide the scripts, sheet, and instructions to allow anyone to generate the reports by hooking the scripts up to their own account. Once the initial sheet has been created, the script only requires the user to provide a Google Analytics profile number and a goal for which they want to generate heatmaps. In this post, we’ll break down the code a bit.

Querying the API

This is a slight amendment to the code that queries the Core Reporting API. Apart from customising the optArgs dimensions to use day and hour stats, I have modified it to use goal data from the active spreadsheet, because not all users will want to measure the same goals:

function getReportDataForProfile(ProfileId, goalNumber) {
  // Take the goal chosen on the spreadsheet and select the correct metric.
  var tableId = 'ga:' + ProfileId;
  var goalId;
  if (goalNumber === 'eCommerce Trans.') {
    goalId = 'ga:Transactions';
  } else {
    goalId = 'ga:goal' + goalNumber + 'Completions';
  }
  // Continue as per example in google documentation ...
}
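As a rough illustration of the same selection logic in isolation, the sketch below pulls the string-building into a plain function that can be checked without a spreadsheet or an Analytics account. The function name `buildHeatmapQuery` and the shape of the returned object are assumptions made for this example, as is the choice of the `ga:dayOfWeek` and `ga:hour` dimensions to stand for the "day and hour stats" mentioned above.

```javascript
// Hypothetical helper: derive the query inputs from the two spreadsheet values.
function buildHeatmapQuery(profileId, goalNumber) {
  // The spreadsheet offers either a goal number or the e-commerce option.
  var metric = (goalNumber === 'eCommerce Trans.')
      ? 'ga:Transactions'
      : 'ga:goal' + goalNumber + 'Completions';
  return {
    tableId: 'ga:' + profileId,
    metric: metric,
    // Assumed dimensions for a day-by-hour heatmap.
    options: { dimensions: 'ga:dayOfWeek,ga:hour' }
  };
}
```

For example, `buildHeatmapQuery('12345', 2)` yields the table ID `ga:12345` and the metric `ga:goal2Completions`.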

Pivoting the Data

Once we’ve brought the Google Analytics data into the spreadsheet in raw form, we use a pivot table to plot the hour of the day against the day of the week.

For this type of report, I’d like to use conditional formatting to heatmap the data, but conditional formatting in Google Sheets is based on fixed values, whereas we want the thresholds to change based on cell values. However, thanks to the flexibility of scripts, I was able to achieve dynamic conditional formatting.

Conditional Formatting Using Scripts

The script needs to know the boundaries of our data, so I’ve set up several cells that display the maximums, minimums, and so forth. Once these were in place, the next step was to create a function that loops through the data and calculates the desired background color for each cell:

function formatting() {
  var sheet = SpreadsheetApp.getActiveSpreadsheet()
      .getSheetByName('Heatmap');

  // Get the range to heatmap and reset its background.
  var range = sheet.getRange('B2:H25');
  range.setBackgroundColor('white');
  var values = range.getValues();

  // Get boundary values for conditional formatting.
  var boundaries = sheet.getRange('B30:B35').getValues();
  var backgroundColours = range.getBackgroundColors();

  for (var i = 0; i < values.length; i++) {
    for (var j = 0; j < values[i].length; j++) {
      var value = values[i][j];
      // The else-if chain ensures a value equal to a boundary still gets a color.
      if (value > boundaries[1][0]) {
        // Over 90%
        backgroundColours[i][j] = '#f8696b';
      } else if (value >= boundaries[2][0]) {
        // Between 80% and 90%
        backgroundColours[i][j] = '#fa9a9c';
      } else if (value >= boundaries[3][0]) {
        // Between 60% and 80%
        backgroundColours[i][j] = '#fbbec1';
      } else if (value >= boundaries[4][0]) {
        // Between 40% and 60%
        backgroundColours[i][j] = '#fcdde0';
      } else if (value >= boundaries[5][0]) {
        // Between 20% and 40%
        backgroundColours[i][j] = '#ebf0f9';
      } else if (value < boundaries[5][0]) {
        // Less than 20%
        backgroundColours[i][j] = '#dce5f3';
      }
    }
  }

  // Set background colors as arranged above.
  range.setBackgroundColors(backgroundColours);
}
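The bucketing step can also be expressed as a small standalone function, which makes it easy to check outside of a spreadsheet. This is only a sketch under a few assumptions: the name `colourFor` is made up, the thresholds are passed as a simple descending array rather than read from cells, and every comparison uses `>=`, which differs slightly from the strict `>` used for the top band above.

```javascript
// Colors from hottest to coolest, matching the six bands used in the heatmap.
var HEATMAP_COLOURS = ['#f8696b', '#fa9a9c', '#fbbec1', '#fcdde0', '#ebf0f9', '#dce5f3'];

// thresholds: five numbers in descending order (the 90%, 80%, 60%, 40% and 20% marks).
function colourFor(value, thresholds) {
  for (var i = 0; i < thresholds.length; i++) {
    if (value >= thresholds[i]) {
      return HEATMAP_COLOURS[i];
    }
  }
  // Below every threshold: use the coolest color.
  return HEATMAP_COLOURS[thresholds.length];
}
```

With thresholds of `[90, 80, 60, 40, 20]`, a value of 85 maps to `#fa9a9c` and a value of 10 maps to `#dce5f3`.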

Calling the functions based on the profile ID and goal number specified in the main sheet gives us a quick, actionable report that can easily be adapted for use across multiple accounts.

function generateHeatmap() {
  try {
    var profileId = SpreadsheetApp.getActiveSpreadsheet()
        .getSheetByName('Heatmap').getRange(4, 10).getValue();
    var goalNumber = SpreadsheetApp.getActiveSpreadsheet()
        .getSheetByName('Heatmap').getRange(7, 10).getValue();

    if (profileId === '') {
      Browser.msgBox('Please enter a valid Profile ID');
    } else {
      var results = getReportDataForProfile(profileId, goalNumber);
      outputToSpreadsheet(results);
      formatting();
    }
  } catch (error) {
    Browser.msgBox(error.message);
  }
}

This was my first foray into the slick integration between the Core Reporting API and spreadsheets, but it has proven a valuable test case for how effective it will be to roll this method of reporting into our daily process of managing accounts.

We have now started the next step, which involves building out “client dashboards” that will give account managers access to useful reports at the press of a button. This moves us toward the goal of minimizing the time spent gathering and collating data, freeing that time up to add further value to client projects.

Editor’s Note: If you’re interested in further scripting your AdWords accounts, take a look at AdWords Scripts, a version of Apps Script that’s embedded right into the AdWords interface.

| David Fothergill profile | twitter | LinkedIn Guest author David Fothergill is a Project Director at the search marketing agency QueryClick, focusing on Paid Search and Conversion Optimisation. He has been working in the field for around 8 years and currently handles a range of clients for the company, in verticals ranging from fashion and retail through to industrial services. |

Google Spreadsheet as Database

A long time ago, I didn’t know databases, but I knew Quattro Pro, and later the amazing Excel. At that time I was sure you could do anything with Excel. Of course, later I discovered Access and afterwards SQL. Bye bye Excel and the "table" as a database.

Today I’m sure that any serious company needs a real database of some kind. Which one is technically the best I don’t know, and I will leave the answer to the masters of the database world. But what I found out today is that spreadsheets are not gone. At least in one very specific situation, Google Spreadsheets might be the best solution. And quite a useful one.

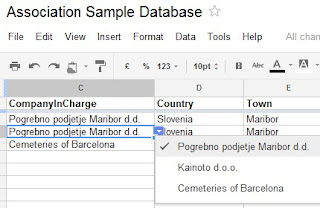

The example is real, but the data has been changed a bit. This is the spreadsheet sample. Three things are really important:

1. "Dropdown" fields. As you can see, many fields are dropdowns whose options are updated from other sheets:

How do you create these fields? In any cell you can go to Data => Validate data as described in help.

This means you can actually get data from any sheet, any list you might have (and keep updating). It is actually a "basic relationship among tables". Well, I didn’t test any cascading, but it is not called "Google database", just "Google Spreadsheets". :-)

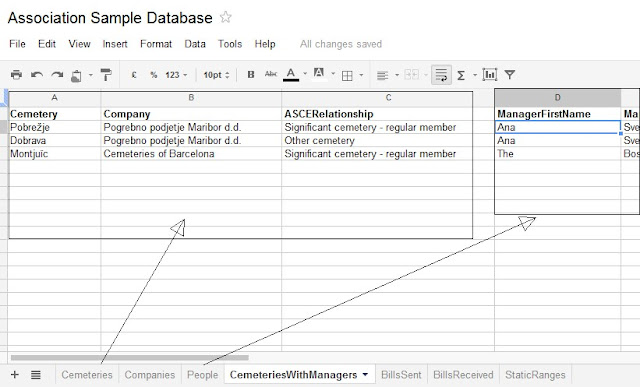

2. QUERY

There might not be any relationships, but you can actually "Query" things around and combine results from several tables. Amazingly, you can even do this across different documents, as described here in detail.

If you look at my example, you can see a Sheet named CemeteriesWithManagers. This Sheet actually combines results from Cemeteries and Managers sheets with 2 simple queries.

In A2 field:

=QUERY(Cemeteries!B2:I500, "SELECT B, C, H")

In D2 field:

=QUERY(People!B$2:E$500, "SELECT B WHERE (D = """&B2&""" AND E = 'General manager')")

OK, they might not be the simplest, and they are explained in detail in the link up there. But just 2 queries actually produce a "View" that can be very useful and can even be put in an iframe as pure HTML.

There was something in this second query that took me some time to find, and it can save you some time. It is the criterion:

D= """&B2&"""

This is not easily found. It is quite logical to want a "dynamic" criterion rather than a fixed one. The line above finds the name in the "People" sheet from the value in column B of the results sheet (in the same row).
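The triple quotes are easier to read once you see what text the formula actually hands to QUERY: inside a formula string, a doubled quote is one literal quote, so `"""&B2&"""` wraps the cell value in double quotes. The sketch below builds the same query text in JavaScript purely to show the result; the function names `quoteForQuery` and `managerQuery` are invented for this example, and rejecting values that contain a double quote is a simplification of this sketch.

```javascript
// Wrap a cell value in double quotes so the query treats it as a string literal.
function quoteForQuery(value) {
  var text = String(value);
  if (text.indexOf('"') !== -1) {
    // Simplification for this sketch: refuse values we cannot quote safely.
    throw new Error('Value contains a double quote: ' + text);
  }
  return '"' + text + '"';
}

// Build the query text that the second formula passes to QUERY for one row.
function managerQuery(cellValue) {
  return 'SELECT B WHERE (D = ' + quoteForQuery(cellValue) +
      " AND E = 'General manager')";
}
```

If B2 contained Highgate, the formula would produce the query text `SELECT B WHERE (D = "Highgate" AND E = 'General manager')`.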

3. Some calculations

There is a SUM field in "Companies" sheet that goes through Invoices (BillsSent or BillsReceived) and calculates values based upon company name.

=SUM(FILTER(BillsSent!H:H;BillsSent!B:B=B2))

Any experienced programmer will know the problems and limitations of this kind of "database". But the solution is not intended for big systems with hundreds of thousands of possible problems and issues. It is intended for specific, but not rare, cases where the client is a dynamic organisation that is managed by a few people living far apart and has a small scope of work, but on the other hand has to keep things organised. It might be a local charity, an international association, a poets’ group or something quite extraordinary that has a very unknown future.

Most of all, as with everything else on the internet, these possibilities and solutions will most probably be used in very odd cases again and again. :-)

Get your Google Drive App listed on the Google Apps Marketplace

Today, we want to share with you four easy steps to get listed immediately and enable admins to install your application for all users in their domain. For more details, check out the Google Apps Marketplace documentation.

Step 1: Open your Drive project on Google Cloud console. Turn on “Google Apps Marketplace SDK” for your project.

Step 2: Click the gear icon to configure “Google Apps Marketplace SDK”. Refer to Google Apps Marketplace documentation for details. In the scopes section, be sure to request the same scopes as your Google Drive application, and check the “Enable Drive extension” checkbox.

Step 3: Go to the Chrome Web Store developer console and select your published Drive application.

Step 4: In your Drive application’s Chrome Web Store manifest, replace the following line, then upload and publish:

"container":"GOOGLE_DRIVE"

with

"container": ["GOOGLE_DRIVE", "DOMAIN_INSTALLABLE"]

You’re done! Your application is now available to all Google Apps for Work customers to install on a domain-wide basis through the Google Apps Marketplace. Refer to Publishing your app documentation for details. You can access Google Apps Marketplace inside Google Admin Console and verify your newly listed application.

Please subscribe to the Google Apps Marketplace G+ community for the latest updates.

Posted by Hiranmoy Saha, Software Engineer, Google Apps Marketplace

Google Drive Hackathon in Tel Aviv, Israel

Hey Tel Aviv developers!

We are organizing a hackathon focusing on Google Drive next week. If you’d like to learn more about the Google Drive SDK and have fun developing your first Google Drive application, join us there!

The event will take place at the Afeka Tel Aviv Academic College of Engineering. We’ll start with an introduction to the Google Drive SDK at 17:30 on Tuesday September 4th 2012 and the hackathon will run through the whole of the next day. We’ll also have some of the Android team to talk a bit about Android, so be sure to check that out. See the detailed agenda of this 2-day event.

Don’t forget to register and have a look at this document to help you prepare.

See you there!

| Nicolas Garnier profile | twitter | events Nicolas Garnier joined Google’s Developer Relations in 2008 and lives in Zurich. He is a Developer Advocate focusing on Google Apps and Web APIs. Before joining Google, Nicolas worked at Airbus and at the French Space Agency where he built web applications for scientific researchers. |

Tuesday, March 10, 2015

Managing tasks and reminders through Google Apps Script

Editor’s Note: Guest author Romain Vialard works at Revevol, an international service provider dedicated to Google Apps and other Cloud solutions. -- Arun Nagarajan

There are many tools available to help you manage a task list and Google Apps comes with its own simple Tasks app. But sometimes it is more convenient and collaborative to simply manage your task list in a shared spreadsheet. This spreadsheet can be a simple personal task list or a project-management interface that requires team-wide coordination.

Google Spreadsheets come with a set of notification rules that might come in handy. For example, you can be notified each time someone adds a new task to the list or each time the status of a task is updated. Furthermore, it is very easy to add basic reminders through Apps Script with just a few lines of code:

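function remindMe() {
  var sheet = SpreadsheetApp.getActiveSpreadsheet().getSheets()[0];
  var data = sheet.getDataRange().getValues();
  var now = new Date();
  // Assumed for illustration: reminders go to the user running the script.
  var recipient = Session.getActiveUser().getEmail();
  // Start at 1 to skip the header row; column C (index 2) holds the due date.
  for (var i = 1; i < data.length; i++) {
    if (data[i][2] <= now) {
      // Assumed layout: column A (index 0) holds the task name.
      MailApp.sendEmail(recipient, 'Task reminder', 'Task due: ' + data[i][0]);
    }
  }
}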

The simple remindMe function performs a standard JavaScript-based date comparison on every row and sends an email for tasks that are due. You can then schedule the remindMe function via a programmable trigger based on the settings.
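One way to make the date comparison easier to test is to separate it from the spreadsheet and mail calls. The sketch below is not part of the published script: the function name `findDueTasks` and the assumed layout (a header row first, due date in the third column) are illustrative only.

```javascript
// Return the rows whose due date (third column) is on or before `now`.
// rows[0] is assumed to be a header row and is skipped.
function findDueTasks(rows, now) {
  var due = [];
  for (var i = 1; i < rows.length; i++) {
    var dueDate = rows[i][2];
    // Ignore rows without a real date in the due-date column.
    if (dueDate instanceof Date && dueDate.getTime() <= now.getTime()) {
      due.push(rows[i]);
    }
  }
  return due;
}
```

A function like this can be fed the result of `getValues()` and the current date, leaving the email step to loop over whatever it returns.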

This script is already available in the Script Gallery today. Try it out for yourself!

Once you have installed the script, you get a new menu option in the spreadsheet that opens a simple user interface to set the options you want. As a developer, you can extend this interface further to provide more options and capabilities.

| Romain Vialard profile | YouTube Romain Vialard is a Google Apps Change Management consultant at Revevol. Romain writes scripts to automate everyday tasks, add functionality and facilitate rapid adoption of cutting edge web infrastructures. As a Google Apps Script Top Contributor, he has also built many of the top scripts in the Apps Script Gallery, including the very popular Gmail Meter. |

Google Drive API Push Notifications

If your app needs to keep up with changes in Drive, whether to sync files, initiate workflows, or just keep users up to date with the latest info, you’re likely familiar with Drive’s changes feed. But periodic polling for changes has always required a delicate balance between resources and timeliness.

Now there’s a better way. With push notifications for the Drive API, periodic polling is no longer necessary. Your app can subscribe for changes to a user’s drive and get notified whenever changes occur.

Suppose your app is hosted on a server at the my-host.com domain, and push notifications should be delivered to the HTTPS web-hook https://my-host.com/notification:

String subscriptionId = UUID.randomUUID().toString();
Channel request = new Channel()
    .setId(subscriptionId)
    .setType("web_hook")
    .setAddress("https://my-host.com/notification");
drive.changes().watch(request).execute();

As long as the subscription is active, Google Drive will trigger a web-hook callback at https://my-host.com/notification. The app can then query the change feed to catch up from the last synchronization point:

ChangeList changes = drive.changes().list()
    .setStartChangeId(lastChangeId).execute();
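On the receiving side, the web-hook callback carries its details in `X-Goog-*` HTTP headers rather than in a request body. The sketch below shows one way a handler might read them; the function name `parseDriveNotification` and the shape of the returned object are assumptions for illustration, and only a few of the headers are picked out.

```javascript
// Extract the interesting fields from the headers of a push notification.
function parseDriveNotification(headers) {
  // Header names are case-insensitive, so normalize them first.
  var lower = {};
  Object.keys(headers).forEach(function (name) {
    lower[name.toLowerCase()] = headers[name];
  });
  var state = lower['x-goog-resource-state'];
  return {
    channelId: lower['x-goog-channel-id'],
    resourceState: state,
    messageNumber: Number(lower['x-goog-message-number']),
    // A "sync" message only confirms the channel was created; no change happened.
    isSync: state === 'sync'
  };
}
```

A handler would typically ignore messages where `isSync` is true and, for the rest, query the change feed from its last synchronization point.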

If your app only needs to be notified about changes to a particular file or folder your app can watch just those files rather than the entire change feed.

If you are interested in using this new feature, please refer to the documentation at developers.google.com. You can see push notifications in action with the Push Notifications Playground and view the source at Github.

| Steven Bazyl profile | twitter Steve is a Developer Advocate for Google Drive and enjoys helping developers build better apps. |

Agile Testing: a response to The Golden Rules of Testing

Ray’s words are in normal text below. My replies are italicized.

***************************

It’s all about finding the bug as early as possible:

- Start the software testing process by analyzing requirements long before development. *I object to the word “long” here. It implies that we do big requirements up front. It also more than implies a process smell: the long gap between analysis and implementation. Rather, let’s take a look at a story together as a team right before development begins on that story to analyze the requirements and create our tests before we start coding. Then, repeat for the next story.*

- Integration testing (performed by IT) *performed by the team.*

- System testing (performed by professional testers) *performed by the team.*

- Acceptance testing (performed by business users) *performed by the team.*

- First let me state this: Automated testing can be extremely useful and can be a real time saver. But it can also turn out to be a very expensive and an invalid solution. *I tried to find some more information from Ray on what he means by automated testing but couldn’t find any, despite the fact that he has written a few blog posts about automated testing. This statement is usually delivered by someone who has attempted and struggled with automated UI testing. Automated UI testing can be more difficult and more expensive, but I’m not sure how it is an "invalid solution". However, automated service testing is comparatively simple, not expensive, not invalid, and a consistent time saver. Automated UI testing can still be valuable, but the ratio of service to UI tests should be heavily weighted toward service testing IMO.*

- If you like to be instantly popular, don’t become a software tester! You’ll find out that you are going to meet a great deal of resistance. It is very likely that you will end up being the sole defender of quality at a certain point. Other participants in the project will be tempted to go for the deadline, whatever the quality of the application is. *This is one of the reasons the agile testing community preaches a whole team approach to quality. Being the sole defender of anything on a project is a problem. We want our teams to own the budget and schedule, not just the PM. We want our teams to own quality, not just the tester, etc.*

Allowing end users to install your app from Google Apps Marketplace

by Chris Han, Product Manager, Google Apps Marketplace

If you have an app in the Google Apps Marketplace that uses OAuth 2.0, you can follow the simple steps below to enable individual end users to install your app. If you’re not yet using OAuth 2.0, instructions to migrate are here.

1. Navigate to your Google Developer Console.

Tips on using the APIs Discovery Service

Our newest set of APIs (Tasks, Calendar v3, and Google+, to name a few) is supported by the Google APIs Discovery Service. The Google APIs Discovery Service offers an interface that allows developers to programmatically get API metadata such as:

- A directory of supported APIs.

- A list of API resource schemas based on JSON Schema.

- A list of API methods and parameters for each method and their inline documentation.

- A list of available OAuth 2.0 scopes.

The APIs Discovery Service is especially useful when building developer tools, as you can use it to automatically generate certain features. For instance, we use the APIs Discovery Service in our client libraries and in our APIs Explorer, and also to generate some of our online API reference.

Because the APIs Discovery Service is itself an API, you can use features such as partial response, which is a way to get only the information you need. Let’s look at some of the useful information that is available using the APIs Discovery Service and the partial response feature.

List the supported APIs

You can get the list of all the APIs that are supported by the discovery service by sending a GET request to the following endpoint:

https://www.googleapis.com/discovery/v1/apis?fields=items(title,discoveryLink)

This returns a JSON feed that looks like this:

    {
      "items": [
        …
        {
          "title": "Google+ API",
          "discoveryLink": "./apis/plus/v1/rest"
        },
        {
          "title": "Tasks API",
          "discoveryLink": "./apis/tasks/v1/rest"
        },
        {
          "title": "Calendar API",
          "discoveryLink": "./apis/calendar/v3/rest"
        },
        …
      ]
    }

Using the discoveryLink attribute of each item in the feed above, you can access the discovery document of each API. This is where a lot of useful information about the API can be accessed.
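As a rough illustration, the relative discoveryLink values can be resolved against the directory endpoint to get absolute discovery document URLs. The sketch below is plain JavaScript (Node.js or a browser) and uses the standard URL class; the `DIRECTORY_URL` constant, the `discoveryUrls` helper, and the hard-coded sample data are assumptions made for illustration, and fetching the feed itself is left out.

```javascript
// Directory endpoint from the article; relative discoveryLink values are
// resolved against it.
const DIRECTORY_URL = "https://www.googleapis.com/discovery/v1/apis";

// Hypothetical helper: map each API title to the absolute URL of its
// discovery document.
function discoveryUrls(directory) {
  const result = {};
  for (const item of directory.items || []) {
    result[item.title] = new URL(item.discoveryLink, DIRECTORY_URL).href;
  }
  return result;
}

// Sample data copied from the partial response shown above.
const sampleDirectory = {
  items: [
    { title: "Google+ API", discoveryLink: "./apis/plus/v1/rest" },
    { title: "Tasks API", discoveryLink: "./apis/tasks/v1/rest" },
    { title: "Calendar API", discoveryLink: "./apis/calendar/v3/rest" }
  ]
};

const resolvedUrls = discoveryUrls(sampleDirectory);
console.log(resolvedUrls["Tasks API"]);
```

Appending a `fields` query parameter to a resolved URL then gives the partial-response requests used in the rest of this post.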

Get the OAuth 2.0 scopes of an API

Using the API-specific endpoint you can easily get the OAuth 2.0 scopes available for that API. For example, here is how to get the scopes of the Google Tasks API:

https://www.googleapis.com/discovery/v1/apis/tasks/v1/rest?fields=auth(oauth2(scopes))

This method returns the JSON output shown below, which indicates that https://www.googleapis.com/auth/tasks and https://www.googleapis.com/auth/tasks.readonly are the two scopes associated with the Tasks API.

    {
      "auth": {
        "oauth2": {
          "scopes": {
            "https://www.googleapis.com/auth/tasks": {
              "description": "Manage your tasks"
            },
            "https://www.googleapis.com/auth/tasks.readonly": {
              "description": "View your tasks"
            }
          }
        }
      }
    }

Using requests of this type, you could detect which APIs do not support OAuth 2.0. For example, the Translate API does not support OAuth 2.0, as it does not provide access to OAuth-protected resources such as user data. Because of this, a GET request to the following endpoint:

https://www.googleapis.com/discovery/v1/apis/translate/v2/rest?fields=auth(oauth2(scopes))

Returns:

{}
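The two responses above suggest a simple check, sketched here in plain JavaScript: read the scope URLs out of a parsed response and treat an API with no scopes as one that does not use OAuth 2.0. The helper names `oauth2Scopes` and `supportsOAuth2` are hypothetical, and the sample objects are copied from the responses shown in this post.

```javascript
// Hypothetical helper: list the OAuth 2.0 scope URLs found in a parsed
// response requested with fields=auth(oauth2(scopes)).
function oauth2Scopes(doc) {
  const scopes = doc && doc.auth && doc.auth.oauth2 && doc.auth.oauth2.scopes;
  return scopes ? Object.keys(scopes) : [];
}

// Assumption: an API that declares no scopes does not use OAuth 2.0.
function supportsOAuth2(doc) {
  return oauth2Scopes(doc).length > 0;
}

// Sample responses copied from the article.
const tasksAuthResponse = {
  auth: {
    oauth2: {
      scopes: {
        "https://www.googleapis.com/auth/tasks": { description: "Manage your tasks" },
        "https://www.googleapis.com/auth/tasks.readonly": { description: "View your tasks" }
      }
    }
  }
};
const translateAuthResponse = {};

console.log(oauth2Scopes(tasksAuthResponse));
console.log(supportsOAuth2(translateAuthResponse));
```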

Getting scopes required for an API’s endpoints and methods

Using the API-specific endpoints again, you can get the lists of operations and API endpoints, along with the scopes required to perform those operations. Here is an example querying that information for the Google Tasks API:

https://www.googleapis.com/discovery/v1/apis/tasks/v1/rest?fields=resources/*/methods(*(path,scopes,httpMethod))

This returns:

    {
      "resources": {
        "tasklists": {
          "methods": {
            "get": {
              "path": "users/@me/lists/{tasklist}",
              "httpMethod": "GET",
              "scopes": [
                "https://www.googleapis.com/auth/tasks",
                "https://www.googleapis.com/auth/tasks.readonly"
              ]
            },
            "insert": {
              "path": "users/@me/lists",
              "httpMethod": "POST",
              "scopes": [
                "https://www.googleapis.com/auth/tasks"
              ]
            },
            …
          }
        },
        "tasks": {
          …
        }
      }
    }

This tells you that to perform a POST request to the users/@me/lists endpoint (to insert a new task list) you need to have been authorized with the scope https://www.googleapis.com/auth/tasks, and that to perform a GET request to the users/@me/lists/{tasklist} endpoint you need to have been authorized with either of the two Google Tasks scopes.

You could use this to automatically discover the scopes you need to request in order to perform all the operations that your application uses.
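One simple way to approach this, sketched below in plain JavaScript, is to look for scopes that on their own authorize every operation the application performs. The `scopesCoveringAll` helper and the "resource.method" naming of operations are assumptions for illustration, and the sample data includes only the two methods shown in the response above. If no single scope covers everything, the function returns an empty list and a combination of scopes would have to be chosen some other way.

```javascript
// Hypothetical helper: given a parsed "resources" partial response and a list
// of operations named "resource.method", return the scopes that each
// authorize every listed operation on their own.
function scopesCoveringAll(discovery, operations) {
  let common = null;
  for (const op of operations) {
    const [resourceName, methodName] = op.split(".");
    const resource = discovery.resources[resourceName];
    const method = resource && resource.methods && resource.methods[methodName];
    if (!method) {
      throw new Error("Unknown operation: " + op);
    }
    const scopes = method.scopes || [];
    // Keep only the scopes that also authorize this operation.
    common = common === null ? scopes.slice() : common.filter((s) => scopes.includes(s));
  }
  return common || [];
}

// Sample data: only the two tasklists methods shown in the article.
const tasksMethods = {
  resources: {
    tasklists: {
      methods: {
        get: {
          path: "users/@me/lists/{tasklist}",
          httpMethod: "GET",
          scopes: [
            "https://www.googleapis.com/auth/tasks",
            "https://www.googleapis.com/auth/tasks.readonly"
          ]
        },
        insert: {
          path: "users/@me/lists",
          httpMethod: "POST",
          scopes: ["https://www.googleapis.com/auth/tasks"]
        }
      }
    }
  }
};

console.log(scopesCoveringAll(tasksMethods, ["tasklists.get", "tasklists.insert"]));
```

An application that only reads task lists would pass `["tasklists.get"]` and get both scopes back, so it could request the narrower read-only one.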

You could also use this information to detect which operations and which endpoints you can access given a specific authorization token (OAuth 2.0, OAuth 1.0 or AuthSub token). First, use either the AuthSub Token Info service or the OAuth 2.0 Token Info Service to determine which scopes your token has access to (see below); then deduce from the feed above which endpoints and operations require access to these scopes.

[Access Token] -----(Token Info)----> [Scopes] -----(APIs Discovery)----> [Operations/API Endpoints]
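The second arrow in this flow could look roughly like the following plain JavaScript sketch, which filters the methods of a parsed discovery response down to those authorized by at least one granted scope. The `accessibleOperations` helper is hypothetical, and the sample data covers only the two tasklists methods shown earlier.

```javascript
// Hypothetical helper: list the "HTTP_METHOD path" pairs that a token holding
// the given scopes can call, according to a parsed discovery response.
function accessibleOperations(discovery, grantedScopes) {
  const granted = new Set(grantedScopes);
  const operations = [];
  for (const resource of Object.values(discovery.resources || {})) {
    for (const method of Object.values(resource.methods || {})) {
      // A method is callable if the token holds any one of its scopes.
      if ((method.scopes || []).some((scope) => granted.has(scope))) {
        operations.push(method.httpMethod + " " + method.path);
      }
    }
  }
  return operations;
}

// Sample data: only the two tasklists methods shown in the article.
const tasksDiscovery = {
  resources: {
    tasklists: {
      methods: {
        get: {
          path: "users/@me/lists/{tasklist}",
          httpMethod: "GET",
          scopes: [
            "https://www.googleapis.com/auth/tasks",
            "https://www.googleapis.com/auth/tasks.readonly"
          ]
        },
        insert: {
          path: "users/@me/lists",
          httpMethod: "POST",
          scopes: ["https://www.googleapis.com/auth/tasks"]
        }
      }
    }
  }
};

console.log(accessibleOperations(tasksDiscovery, ["https://www.googleapis.com/auth/tasks.readonly"]));
```

Real discovery documents can nest resources inside resources; this sketch only walks the top level.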

Example of using the OAuth 2.0 Token Info service:

Request:

    GET /oauth2/v1/tokeninfo?access_token=<access_token> HTTP/1.1
    Host: www.googleapis.com

Response:

    HTTP/1.1 200 OK
    Content-Type: application/json; charset=UTF-8
    …

    {
      "issued_to": "1234567890.apps.googleusercontent.com",
      "audience": "1234567890.apps.googleusercontent.com",
      "scope": "https://www.google.com/m8/feeds/ https://www.google.com/calendar/feeds/",
      "expires_in": 1038
    }
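The scope field in this response is a single string with the scopes separated by whitespace, so it needs to be split before it can be compared against the scopes listed in a discovery document. A minimal sketch in plain JavaScript, where the `tokenScopes` helper is a hypothetical name and the sample object is copied from the response above:

```javascript
// Hypothetical helper: turn the whitespace-separated "scope" string of a
// parsed Token Info response into an array of scope URLs.
function tokenScopes(tokenInfo) {
  return (tokenInfo.scope || "").split(/\s+/).filter(Boolean);
}

// Sample response body copied from the article.
const sampleTokenInfo = {
  issued_to: "1234567890.apps.googleusercontent.com",
  audience: "1234567890.apps.googleusercontent.com",
  scope: "https://www.google.com/m8/feeds/ https://www.google.com/calendar/feeds/",
  expires_in: 1038
};

console.log(tokenScopes(sampleTokenInfo));
```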

There is a lot more you can do with the APIs Discovery Service so I invite you to have a deeper look at the documentation to find out more.

| Nicolas Garnier | Nicolas joined Google’s Developer Relations in 2008. Since then he’s worked on commerce-oriented products such as Google Checkout and Google Base. Currently, he is working on Google Apps with a focus on the Google Calendar API, the Google Contacts API, and the Tasks API. Before joining Google, Nicolas worked at Airbus and at the French Space Agency where he built web applications for scientific researchers. |

Monday, March 9, 2015

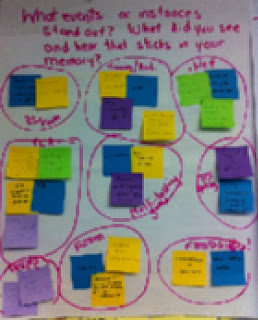

Day 4 at Agile2010

The session with Gerry Kirk and Michael Sahota was designed to create a knowledge base of methods and tools for doing agile readiness assessments. It was great to see ideas from other coaches and I look forward to the compiled results.

The conference party at Epcot was also a lot of fun and I enjoyed some non-agile time with my agile friends.

Google Apps Script opens new ways to deliver workflow solutions for businesses

Google Sites offers an incredible way to author and distribute content, and we use it extensively both for ourselves and our clients. Therefore it was not surprising that our customers started requesting a content approval workflow in Google Sites. Now with Google Apps Script, we have been able to develop Appogee Content Approval for Google Sites.

The Solution

Appogee Content Approval for Google Sites (ACA) can be set up for any existing Google Site without having to make any changes to the site. ACA works as follows:

- The ACA spreadsheet generates a Content Submission Form and any content submitted is routed to a selected approver.

- The approver receives an email notification containing the submitted content and can authorize or reject the submission.

- Once authorized, the ACA spreadsheet writes the new content into the target Site using Apps Script’s Sites services. The new content is then visible to anyone with view permissions in the target site.

| Approver receives an email containing the submitted content |

The content submission URL can be shared with any group or published directly on the target Google Site, which represents the end goal on the workflow diagram below. Content may only be submitted by users who are logged into your domain, and content can only be published with the approver’s sanction.

| ACA Workflow Diagram |

Google Apps Script made it easy

One of Google Apps Script’s core features is the ability to seamlessly integrate different services. In our case these were Google Sites and Spreadsheets, but many other services are accessible, such as Mail and Contacts. Our aim was always to make ACA a powerful tool without unnecessary complexity, and thanks to Google Apps Script we have successfully delivered a content approval workflow for Google Sites. ACA is our third off-the-shelf product to be listed in the Google Apps Marketplace.

Posted by John Gale, Solution Developer, Appogee

Introducing Google Drive and the Google Drive SDK

Editor’s note: This post is cross-posted from the Google Developers Blog.

Today, we’re announcing Google Drive, a place where people can create, share, collaborate and keep all of their stuff. Drive is a natural step in the evolution of Google Docs. Drive is built to work seamlessly with other Google applications like Google+, Docs and Gmail, and your app can too. Joining the launch today are 18 web apps that have integrated with Drive using the Google Drive SDK.

Integrating your application with Google Drive makes it available to millions of users. Drive apps are distributed from the Chrome Web Store, and can be used with any modern browser. Plus, your app can take advantage of Google’s sharing, storage, and identity management features.

Create and collaborate

Google Drive allows for more than storage. Google Docs is built right into Drive, and your app can join the party. For example, Lucidchart is an online visual diagramming tool integrated with Google Drive. You can start a new Lucidchart or share your diagrams with friends or coworkers straight from Drive, just like a Google document or spreadsheet.

Store everything safely and access it everywhere

With Google Drive you can store all of your files and access them from anywhere. For example, MindMeister, an app for creating mind maps online, also lets you open files from popular desktop mind mapping applications. By integrating with Google Drive, MindMeister users can open their mind maps stored in Drive from any modern browser.

Search everything

Your app can also take advantage of Drive’s storage, indexing, and document viewers. For example, HelloFax is a web application that lets you sign and fax documents from your browser. HelloFax users can now store all their inbound and outbound faxes in Google Drive, making them easy to find later. Plus, with automatic OCR, users can even search and find text in faxed images. Your application can store files of any type up to 10 GB in size or create file-like shortcuts to your application’s data.

Want your application to work with Google Drive? Full documentation on the Google Drive SDK is available at developers.google.com/drive, or if you’re itching to start building, head to our Getting Started guide. Our team will be on Stack Overflow to answer any questions you have when integrating your app with Google Drive. You can also bring your questions to our Hangout this Thursday at 10:30 AM PDT / 17:30 UTC.

Look for more posts about working with the Drive SDK on the Google Apps Developer Blog in the coming weeks.

| Mike Procopio | Mike is a Software Engineer for Google Drive, focusing on all things Drive apps. He gets to leverage his passion for the developer and user experience by working on the next-generation APIs that help unleash Google Drive. Before joining Google in 2010, he was a machine learning researcher, and enjoys engaging in illuminating statistical discussions at every opportunity. |

Don’t Etch your User Story Map in Stone

I was having a chat with Adam Yuret last week about user story maps. A concern that he expressed and others have voiced is that by putting your ideas into a user story map it might discourage you from changing the map as you start delivering the stories and learn more information. He’s right - it might and it probably does. Kent Beck recently expressed a similar concern about product roadmaps on Twitter.

* How to Create a User Story Map

* How to Prioritize a User Story Map

* Tips for Facilitating a User Story Mapping Session

* Don’t Etch your User Story Map in Stone

* User Story Mapping Tool Review – SmartViewApp

* Story Maps - A Testing Tool After All

- Will anyone use it?

- Will anyone pay?

- Does anyone care?

- How will we make $?

- Can we build it?

- Do we understand the problem?

- Will this solve the problem?

- Will it perform adequately?

- Will it integrate with other applications successfully?

- Can we build this with the budget & schedule we have?

- What is the best architecture for this project?

When you build and prioritize your map, you should be doing so with these questions in mind. As you resolve each of those questions, your map should change. It is one of the great reasons to put your map on the wall using post-it notes instead of putting the map into a tool - post-it notes are easy (and fun) to move. (Aside: Yes, there are sometimes excellent reasons to put things into a tool.)

Two quick stories to illustrate:

In summary, "You cannot plan the future. Only presumptuous fools plan. The wise man steers." - Terry Pratchett. Don’t etch your user story map in stone - build it with paper and expect to change it as you learn.

Silent Brainstorming

"Cognitive fixation causes people to focus on other people’s ideas and are, inevitably, unable to come up with their own."

"If the goal is to come up with a bunch of unique ideas or solutions to problems, then the group should be split up so that individuals can come up with their own ideas and these ideas can later be combined with other members’ ideas."

"...a group session after an individual session might be the optimal brainstorming technique."

So how can you combine both individual and group brainstorming? Here is an approach I have been using, which I’ve put together based on my experiences facilitating and participating in other sessions. This approach can be used for any brainstorming session, whether you are trying to generate user stories, ideas for a retrospective, or strategies for your community group.

Step 1: Establish the goal.

Make sure everyone understands the purpose of the brainstorming session. For many sessions this can be communicated to attendees before the meeting begins.

Note: If your group is larger than 10, I would recommend splitting the group up into several smaller groups for steps 2 through 5. The groups can present their best ideas to each other at the end of the exercise and re-open the discussion and voting at that point if appropriate.

Step 2. Individual (and silent) brainstorming.

Step 3. Describe your ideas

Once everyone has finished writing down their ideas, choose one person and ask them to describe their best idea and then place that post-it on the wall or the table. Continue going around the table, asking each person to describe their top idea, until all ideas have been presented. It should take several rounds, and each person will have the opportunity to present several ideas, one during each round. While the ideas are being described, encourage everyone to keep writing additional ideas down. This allows the group to combine and improve upon ideas presented by others.

Step 4. Group the ideas

There are several ways to group the ideas depending on your group size.

a) If your group size is five or fewer, I prefer using silent affinity grouping because it is fast and collaborative. Ask your team to silently group the ideas. Things that are similar to each other should be moved closer to each other. Things that are dissimilar to each other should be moved farther apart. Groups should form naturally and fairly easily. Once again, body language will help you see when they are done - usually 2-3 minutes.

b) If your group size is more than five, I prefer to have one person group the post-its as they are presented because it is faster. Simply put the post-it near other post-its with similar ideas.

Once the groups are created you can name each group with a short title.

Step 5. Silent Voting

If you need to vote on the ideas or on the groups, I prefer using silent voting. Number each post-it and then ask each person to write down their top three on a post-it. Once all the votes are in, tabulate the votes to identify the top ideas.

Summary

This method of brainstorming combines the best of both individual and group brainstorming techniques and is consistent with the latest research. However, I initially started using it not because it conforms to the latest research but because it allows everyone to have a voice - the loud people can’t dominate the conversation and the quiet people are given a way to contribute. That it reduces the effect of cognitive fixation when generating the initial list of ideas is an added benefit.

References:

- http://www.businessinsider.com/brainstorming-team-building-effectiveness-2012-1

- http://onlinelibrary.wiley.com/doi/10.1002/acp.1699/pdf

- I was videoed by InfoQ talking about Silent Brainstorming at SDEC12. Watch the video here.

More opportunities to Hangout with the Google Apps developer team in 2011

We’ve held many Office Hours on Google+ Hangouts over the last two months, bringing together Google Apps developers from around the world along with Googlers to discuss the Apps APIs. We’ve heard great feedback about these Office Hours from participants, so we’ve scheduled a few more in 2011.

General office hours (covering all Google Apps APIs):

- TODAY: December 8 @ 10:00am PST

- December 14 @ 1:30pm PST

- December 20 @ 12:00pm PST

Product-specific office hours:

- Google Apps Marketplace - December 12 @ 12:00pm PST

As we add more Office Hours in the new year, we’ll post them on the events calendar, and announce them on @GoogleAppsDev and our personal Google+ profiles.

Hope you’ll hang out with us soon!

| Ryan Boyd | Ryan is a Developer Advocate on the Google Apps team, helping businesses build applications integrated with Google Apps. Wearing both engineering and business development hats, you’ll find Ryan writing code and helping businesses get to market with integrated features. |

Sunday, March 8, 2015

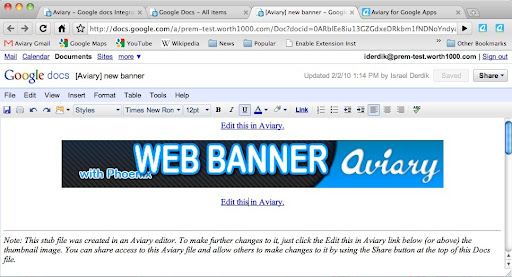

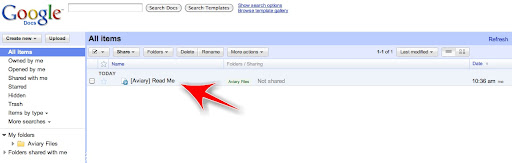

Integrating with Google Docs: Aviary’s experience

Aviary is a multimedia editing suite that lets people edit and retouch images, create logos, mark up screenshots and even edit audio. Aviary is available on the Google Apps Marketplace, and can be accessed right from the Google Apps navigation bar.

One of the features Aviary built as part of this integration with Google Apps lets users save their Aviary creations to their Google Docs account. Integrating Aviary’s suite of editors and their associated files into Google Docs was a terrific challenge for the team. Google provided Aviary with a great initial set of APIs to let Google Apps users navigate to and launch Aviary from within their universal navigation.

Users could retrieve Google Docs files within the Aviary editors once they were launched (via the DocList API). However, Aviary found that although saving the files to Docs was easy, there was no way to associate an Aviary editor with a specific file type (e.g., jpg) and launch Aviary’s editors directly from inside Google’s interface.

Focusing on the User Experience

Aviary wanted a way to make the experience truly seamless for Google’s users. Having separate areas to manage Aviary files and Google Docs was a less than ideal user experience. Add in the fact that many more third-party apps will be launching in Google’s marketplace, and the end result would be an organizational nightmare for users trying to remember where all of their files were stored.

Keeping things in one universal interface was imperative, but there was no native API for that yet. So to protect the user experience in the interim, Aviary needed to come up with a novel work around. Fortunately, there was a simple and elegant way.

Because Google does allow creating new native Documents using the DocumentService.Insert method of the DocList API, Aviary was able to create "stub documents" with private references to the actual Aviary document inside of them. Each stub document contains a thumbnail of the final image and a private (but shareable) URL that launches the Aviary editor with the original editable Aviary ".egg" file inside of it (".egg" is our native file format - it seemed apropos for Aviary).

Another benefit of this approach is that it allows Aviary to work with Google Apps Standard accounts, which ordinarily do not allow the uploading of arbitrary custom file types (unlike Google Apps Premier accounts, which do).

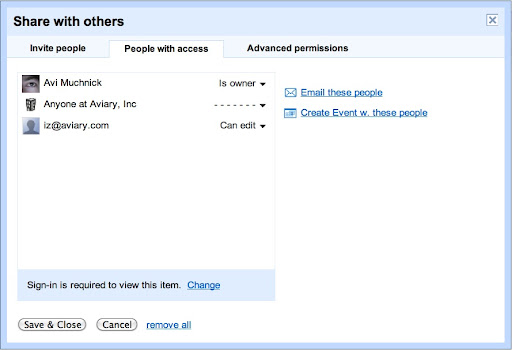

Collaboration Management

Best of all, routing the file through Google Docs means that managing permissions on who can edit and see the document is as simple as managing permissions on any Google Document. Aviary didn’t need to reinvent the wheel.

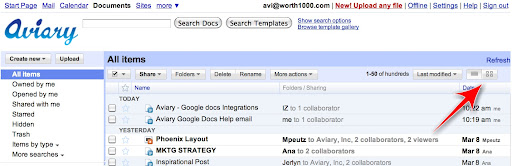

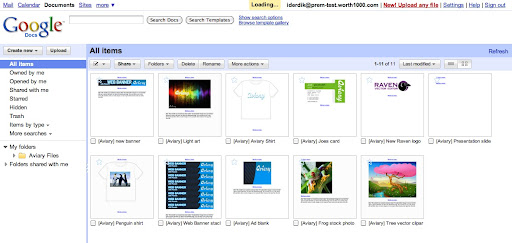

Presentation of Aviary files within Google Docs

Aviary is a suite of mostly design-oriented applications, although it has audio editing capabilities as well. This means that most files created in Aviary are visual in nature. Browsing through a list of file names when looking for a visual file can be inefficient, but sticking with some of Google’s native functionality worked well. Google’s Docs list has a little-known Grid view button, which displays Google Docs using a grid of thumbnails.

Grid view gives the user a visual thumbnail preview of the contents of their Google Docs - including the stub documents we made. Perfect for finding Aviary files!

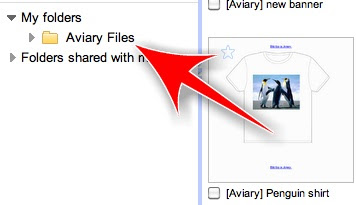

Document Organization

To make things simpler to find and organize, all of the stub files are in an Aviary folder by default. To facilitate file and folder management, Aviary wrapped the GData classes provided by Google with an Aviary helper class that provided all of the functionality needed to create folders and to upload and replace files. Within this class, Aviary used the DocumentService.Insert method of the DocList API to add an AtomEntry with a category of "folder" to create this base folder.

One additional thing Aviary did was to automatically create a Help File in a user’s Google Docs list the very first time an Apps user clicks on the Aviary link in Google’s universal navigation. This help file contains an overview of the different Aviary editors that are available and some tips on how to use them. To create this help document, Aviary used the same DocumentService.Insert method of the DocList API that was used to create the stub documents. Aviary sends the user a one-time summary email with the contents of this help file to make sure they can find the answers they need in the future.

Put together, we now have a workable application that integrates smoothly with Google Apps, giving all Apps users complete access to Aviary’s multimedia editing suite. The more developers build on Google’s Apps platform, the more useful it is to users, and the more everyone’s apps are used as a result.

Posted by Don Dodge, Google Apps Team

Wednesday, March 4, 2015

Software Agents to Assist in Distance Learning Environments

Link to article (By Sheung-On Choy, Sin-Chun Ng, and Yiu-Chung Tsang)

"Software agents can act as teaching assistants for distance learning courses by monitoring and managing course activities

...A number of researchers have proposed the development of software agents in teaching and learning situations. Jafari conceptualized three types of software agents to assist teachers and students:

- Digital Teaching Assistant - assists the human teacher in various teaching functions

- Digital Tutor - helps students with specific learning needs

- Digital Secretary - acts as a secretary to assist students and teachers with various logistical and administrative needs"

This paper discusses a successful software agent pilot implementation of the first type in four Open University of Hong Kong (OUHK) distance learning courses. The Digital Teaching Assistant carried out the following tasks:

- "Send alert e-mails to inactive students (those who have not accessed the course Web site for a long period of time). The course coordinator decides the length of the inactive period and instructs the software agent to send the alert e-mails based on the established time.

- Send e-mails to inform tutors about inactive students and advise tutors to have proactive consultation with those students.

- Send e-mail alerts to those students who have not downloaded a particular piece of course material or who have not read an important piece of course news since it was uploaded to the file server. This helps prevent students from missing information or forgetting to download an important item, such as an assignment file.

- Help the course coordinator keep track of students’ progress, and send e-mails to the coordinator and tutors about those students whose performance is at a marginal level.

- Retrieve information from the course timetable and send reminder e-mails to students. For example, it might send a reminder to students five days before an assignment due date and one day before a face-to-face session.

- During the period when assignments are submitted, the agent will monitor the assignment submission status and send e-mails after the due date to those students who have not submitted the assignment. It will also inform the course coordinator and tutors about those students."

In general, these tasks consume a lot of human effort on unintelligent information retrieval and processing. With an agent such as the one described above, a lot of time can be saved on routine tasks and spent instead on more productive activities, such as content development and facilitating a more engaging online learning environment.

A lot of lessons can be learned from this excellent article. Also, with more creativity and research, I suppose the software agent could do a lot more to minimize the routine, time-wasting stuff most lecturers or facilitators hate to do.

Where is my R&D software agent to do my routine stuff?